As generative AI revolutionizes various industries, developers are increasingly looking for ways to integrate large language models (LLMs) into their applications. Amazon Bedrock is a powerful solution. It offers a fully managed service that provides access to a wide range of foundation models through a unified API. This guide explores the key benefits of Amazon Bedrock, how to integrate different LLMs into your projects, how to simplify the management of the various LLM prompts your application uses, and best practices to consider for production use.

Key benefits of Amazon Bedrock

Amazon Bedrock simplifies the initial integration of LLMs into any application by providing all the foundational capabilities needed to get started.

Simplified access to leading models

Bedrock provides access to a diverse selection of high-performing foundation models from providers such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon. This variety allows developers to choose the most suitable model for their use case and to switch models as needed without managing multiple vendor relationships or APIs.

Fully managed and serverless

As a fully managed service, Bedrock eliminates the need for infrastructure management. This lets developers focus on building applications rather than worrying about the underlying concerns of infrastructure setup, model deployment, and scaling.

Enterprise-grade security and privacy

Bedrock offers built-in security features, ensuring that your data never leaves your AWS environment and remains private and encrypted. It also supports compliance with various standards, including ISO, SOC, and HIPAA.

Stay up to date with the latest infrastructure improvements

Bedrock regularly releases new features that push the boundaries of LLM applications, often with little or no setup on your part. For example, it recently released latency-optimized inference, which reduces LLM inference latency without compromising accuracy.

Getting started with Bedrock

In this section, we'll use the AWS SDK for Python (Boto3) to build a small application on your local machine, providing a hands-on guide to getting started with Amazon Bedrock. This will help you understand the practical aspects of using Bedrock and how to integrate it into your projects.

Prerequisites

- An AWS account.

- Python installed. If it isn't installed, get it by following this guide.

- The AWS SDK for Python (Boto3) installed and configured correctly. It's recommended to create an AWS IAM user that Boto3 can use. Instructions are available in the Boto3 Quickstart guide.

- If you are using an IAM user, make sure you attach the AmazonBedrockFullAccess policy to it. You can attach policies using the AWS console.

- Follow this guide to request access to one or more models on Bedrock.

1. Bedrock client

Bedrock has multiple clients available in the AWS SDK. The Bedrock client lets you interact with the service to create and manage models, while the BedrockRuntime client lets you invoke existing models. We will use one of the existing off-the-shelf foundation models in this tutorial, so we'll only work with the BedrockRuntime client.

```python
import boto3
import json

# Create a Bedrock Runtime client
bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")
```

2. Invoking the model

In this example, I've used the Amazon Nova Micro model (model ID amazon.nova-micro-v1:0), one of the cheapest models on Bedrock. We will send a simple prompt asking the model to write a poem and set parameters that control the output length and the level of creativity (called "temperature"). Feel free to experiment with different prompts and tune the parameters to see how they affect the output.

```python
import boto3
import json

# Create a Bedrock Runtime client
bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")

# Select a model (feel free to experiment with different models)
modelId = 'amazon.nova-micro-v1:0'

# Configure the request with the prompt and inference parameters
body = json.dumps({
    "schemaVersion": "messages-v1",
    "messages": [{"role": "user", "content": [{"text": "Write a short poem about a software development hero."}]}],
    "inferenceConfig": {
        "max_new_tokens": 200,  # Adjust for shorter or longer outputs.
        "temperature": 0.7  # Increase for more creativity, decrease for more predictability.
    }
})

# Make the request to Bedrock
response = bedrock.invoke_model(body=body, modelId=modelId)

# Process the response
response_body = json.loads(response.get('body').read())
print(response_body)
```

As shown below, we can also try another model, such as Anthropic's Claude 3 Haiku.

```python
import boto3
import json

# Create a Bedrock Runtime client
bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")

# Select a model (feel free to experiment with different models)
modelId = 'anthropic.claude-3-haiku-20240307-v1:0'

# Configure the request with the prompt and inference parameters
body = json.dumps({
    "anthropic_version": "bedrock-2023-05-31",
    "messages": [{"role": "user", "content": [{"type": "text", "text": "Write a short poem about a software development hero."}]}],
    "max_tokens": 200,  # Adjust for shorter or longer outputs.
    "temperature": 0.7  # Increase for more creativity, decrease for more predictability.
})

# Make the request to Bedrock
response = bedrock.invoke_model(body=body, modelId=modelId)

# Process the response
response_body = json.loads(response.get('body').read())
print(response_body)
```

Note that the request and response structures differ slightly between models. This is a drawback that we will address using predefined prompt templates in the next section. To experiment with other models, you can find the modelId and sample API requests for each model on the "Model catalog" page in the Bedrock console, then tune your code accordingly. Some models also have detailed guides written by AWS, which you can find here.

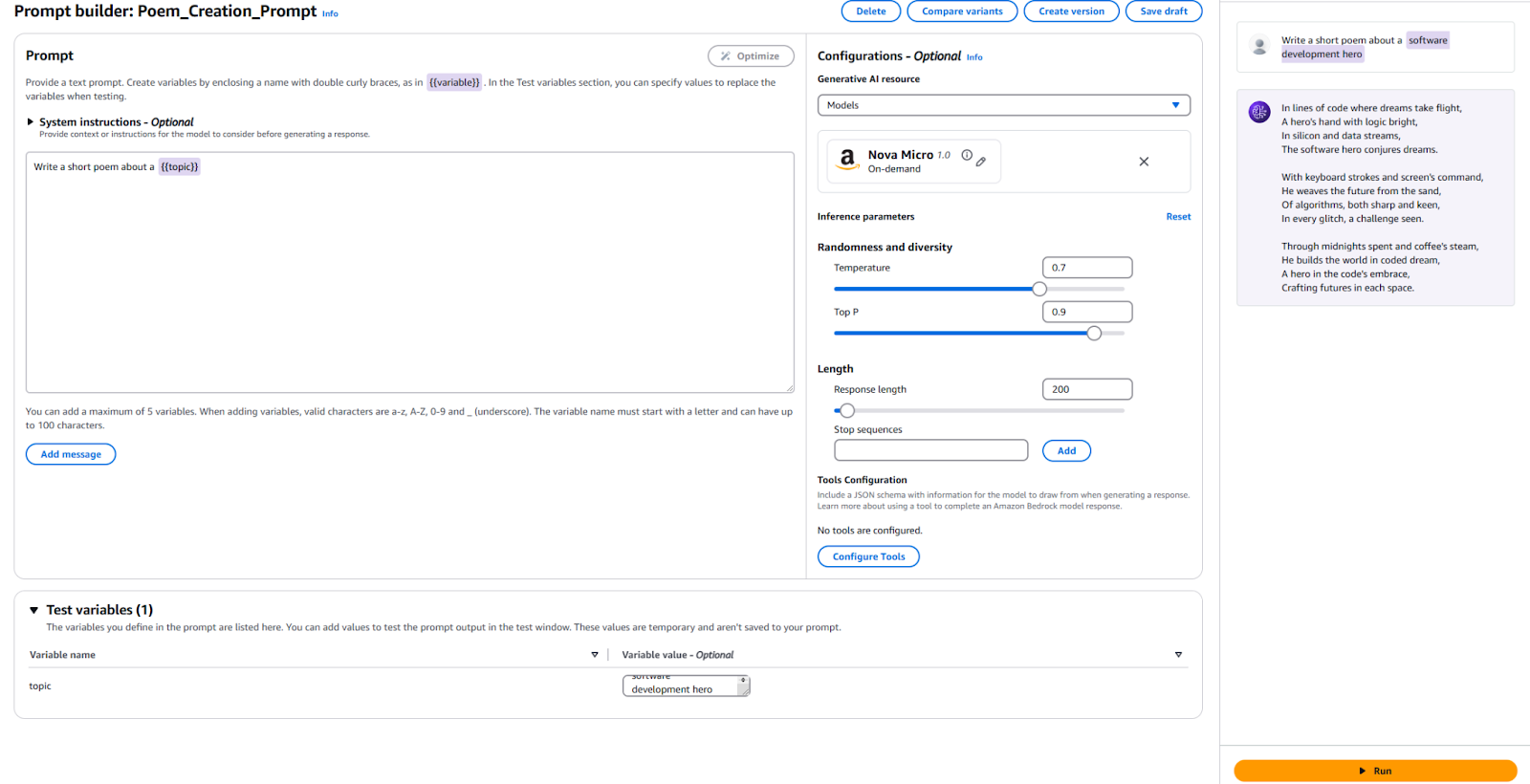
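Because each model family expects its own request and response shape, it can help to keep those differences in one place in your own code. The sketch below assumes only the two request formats used above (the Nova body with schemaVersion and inferenceConfig, and the Anthropic body with anthropic_version) plus the response shapes those families typically return. The helper names build_body and extract_text, and the 0 to 1 temperature check, are our own assumptions rather than part of any AWS library; the code is plain Python, so it runs without AWS credentials.

```python
import json

# Model IDs used earlier in this walkthrough.
NOVA_MICRO = "amazon.nova-micro-v1:0"
CLAUDE_HAIKU = "anthropic.claude-3-haiku-20240307-v1:0"


def build_body(model_id: str, prompt: str, max_tokens: int = 200, temperature: float = 0.7) -> str:
    """Return a JSON request body in the format the given model family expects."""
    # Assumption: both families accept temperatures between 0 and 1; check each model's docs.
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    if model_id.startswith("amazon.nova"):
        payload = {
            "schemaVersion": "messages-v1",
            "messages": [{"role": "user", "content": [{"text": prompt}]}],
            "inferenceConfig": {"max_new_tokens": max_tokens, "temperature": temperature},
        }
    elif model_id.startswith("anthropic."):
        payload = {
            "anthropic_version": "bedrock-2023-05-31",
            "messages": [{"role": "user", "content": [{"type": "text", "text": prompt}]}],
            "max_tokens": max_tokens,
            "temperature": temperature,
        }
    else:
        raise ValueError(f"no request format registered for {model_id}")
    return json.dumps(payload)


def extract_text(model_id: str, response_body: dict) -> str:
    """Pull the generated text out of a parsed (json.loads) response body."""
    if model_id.startswith("amazon.nova"):
        blocks = response_body["output"]["message"]["content"]
    elif model_id.startswith("anthropic."):
        blocks = response_body["content"]
    else:
        raise ValueError(f"no response format registered for {model_id}")
    # Both families return a list of content blocks; join the text they contain.
    return "".join(block.get("text", "") for block in blocks)
```

With the bedrock client from the earlier examples, a call could then look like response = bedrock.invoke_model(modelId=NOVA_MICRO, body=build_body(NOVA_MICRO, "Write a short poem about a software development hero.")), followed by extract_text(NOVA_MICRO, json.loads(response["body"].read())). Adding another model family then means adding one branch to each helper.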

3. Using Prompt Management

Bedrock provides a nifty tool for creating and experimenting with predefined prompt templates. Instead of defining prompts and specific parameters, such as token length or temperature, in your code, you can create predefined templates in the Prompt Management console. You specify input variables that will be injected at runtime, set up all the required inference parameters, and publish a version of your prompt. Once that's done, your application code can invoke the desired version of your prompt template.
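To make "input variables injected at runtime" concrete, here is a local sketch of what filling a {{subject}}-style placeholder amounts to. It is purely illustrative: it assumes simple {{name}} placeholders made of word characters, render_prompt is a hypothetical helper, and it is not how Bedrock renders templates internally.

```python
import re

# Matches {{name}} placeholders such as {{subject}} (assumed: word characters only, no spaces).
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_prompt(template: str, variables: dict) -> str:
    """Replace each {{name}} with its value, raising KeyError if any value is missing."""
    missing = sorted(set(PLACEHOLDER.findall(template)) - set(variables))
    if missing:
        raise KeyError(f"missing prompt variables: {missing}")
    return PLACEHOLDER.sub(lambda match: str(variables[match.group(1)]), template)


print(render_prompt("Write a short poem about a {{subject}}.", {"subject": "software development hero"}))
# prints: Write a short poem about a software development hero.
```

Failing on a missing variable, rather than sending the raw placeholder to the model, is a deliberate choice in this sketch: a template that silently keeps "{{subject}}" tends to produce confusing output.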

Key benefits of using predefined prompts:

- They keep your application organized as it grows to use different prompts, parameters, and models for various use cases.

- They make it easy to reuse a prompt when the same prompt is used in multiple places.

- They abstract the details of LLM inference away from your application code.

- Prompt engineers can optimize prompts in the console without touching your actual application code.

- They make experimentation easy by letting you use different versions of a prompt. You can tweak the prompt input, parameters such as temperature, or even the model itself.

Let's try it out now:

- Go to the Bedrock console and click "Prompt management" in the left panel.

- Click "Create prompt" and give your new prompt a name.

- Enter the text that we want to send to the LLM, including a placeholder variable. I used "Write a short poem about a {{subject}}."

- In the configuration section, specify which model you want to use and set the values of the same parameters we used earlier, such as "Temperature" and "Max tokens". If you prefer, you can leave the defaults as they are.

- It's time to test it! At the bottom of the page, provide a value for your test variable. I used "software development hero". Then click "Run" on the right to check whether you're happy with the output.

For reference, here are my configuration and results.

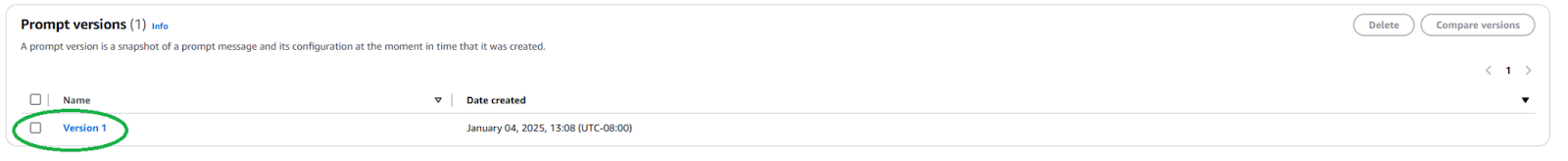

We need to publish a new prompt version to use this prompt in our application. To do so, click the "Create version" button at the top. This creates a snapshot of your current configuration. If you want to keep experimenting, you can continue editing and create more versions.

Once it's published, we need to find the ARN (Amazon Resource Name) of the prompt version by navigating to the page for your prompt and clicking the newly created version.

Copy the ARN of this specific prompt version to use in your code.

Once we have the ARN, we can update our code to invoke this predefined prompt. We only need the ARN of the prompt version and the values of any variables we inject into it.

```python
import boto3
import json

# Create a Bedrock Runtime client
bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")

# Select your prompt identifier and version (paste the ARN of your prompt version here)
promptArn = ""

# Define any required prompt variables
body = json.dumps({
    "promptVariables": {
        "subject": {"text": "software development hero"}
    }
})

# Make the request to Bedrock
response = bedrock.invoke_model(modelId=promptArn, body=body)

# Process the response
response_body = json.loads(response.get('body').read())
print(response_body)
```

As you can see, this simplifies our application code by abstracting away the details of LLM inference and promoting reuse. Feel free to play around with the parameters within your prompts, create different versions, and use them in your application. You could extend this into a simple command line application that takes the user's input and writes a short poem on that subject.
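A minimal sketch of that command line tool follows. It reuses the promptVariables body and the invoke_model call from the example above; build_prompt_body, invoke_bedrock, and main are hypothetical names. Passing the invoke function as a parameter is a design choice that lets you swap in a fake to test the argument handling without calling AWS, and boto3 is only imported when a real request is made.

```python
import argparse
import json


def build_prompt_body(subject: str) -> str:
    """Wrap the user's subject in the promptVariables format used above."""
    return json.dumps({"promptVariables": {"subject": {"text": subject}}})


def invoke_bedrock(prompt_arn: str, body: str) -> dict:
    """Send the request with a bedrock-runtime client, as in the earlier example."""
    import boto3  # imported here so the rest of the script works without boto3 installed

    client = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")
    response = client.invoke_model(modelId=prompt_arn, body=body)
    return json.loads(response["body"].read())


def main(argv=None, invoke=invoke_bedrock) -> dict:
    """Parse the command line, invoke the published prompt, and print the raw response."""
    parser = argparse.ArgumentParser(description="Write a short poem about a subject.")
    parser.add_argument("subject", help='for example "software development hero"')
    parser.add_argument("--prompt-arn", required=True, help="ARN of your published prompt version")
    args = parser.parse_args(argv)
    result = invoke(args.prompt_arn, build_prompt_body(args.subject))
    print(json.dumps(result, indent=2))
    return result
```

Saved as a script that calls main() under an if __name__ == "__main__": guard, it could be run as python poem_cli.py "software development hero" --prompt-arn followed by your prompt version ARN (the file name is only an example).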

Next steps and best practices

Once you're comfortable using Bedrock to integrate an LLM into your application, explore some practical considerations and best practices to get your application ready for production use.

Prompt engineering

The prompt you use to invoke the model can make or break your application. Prompt engineering is the process of creating and optimizing instructions to get the desired output from an LLM. With predefined prompt templates, skilled prompt engineers can work on prompts without interfering with your application's software development process. You may need to tailor your prompt to the specific model you want to use, so familiarize yourself with the prompting techniques recommended by each model provider. Bedrock provides guidelines for many of the major models.

Model selection

Choosing the right model is a balance between your application's requirements and cost. More capable models are more expensive. Not every use case requires the most powerful model, while the cheapest models may not always deliver the performance you need. Use the Model Evaluation feature to quickly test and compare the outputs of different models and find out which one best meets your needs. Bedrock offers several options for uploading test datasets and configuring how model accuracy should be evaluated for individual use cases.

Fine-tune and enhance your model with RAG and agents

If an off-the-shelf model doesn't work well enough for you, Bedrock offers options to tune a model for your specific use case. Create your training data, upload it to S3, and use the Bedrock console to start a fine-tuning job. You can also enhance your models using techniques such as retrieval-augmented generation (RAG) to improve performance for specific use cases: connect existing data sources, and Bedrock will make them available to the model to enhance its knowledge. Bedrock also offers the ability to create agents that plan and execute complex multi-step tasks using your existing company systems and data sources.
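To show what retrieval-augmented generation does at its core, here is a toy version: find the passages most related to a question and prepend them to the prompt. This is an assumption-laden sketch, not how Bedrock implements RAG; managed setups typically use embeddings and a vector store, whereas this one simply ranks passages by shared words. The tokenize, retrieve, and augment_prompt helpers and the sample documents are hypothetical, and the resulting prompt would be sent with invoke_model as in the earlier examples.

```python
import re
from typing import List


def tokenize(text: str) -> set:
    """Lower-cased set of words; a crude stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents that share the most words with the question."""
    query = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(query & tokenize(doc)), reverse=True)
    return ranked[:k]


def augment_prompt(question: str, documents: List[str], k: int = 2) -> str:
    """Prepend the retrieved passages so the model can ground its answer in them."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


docs = [
    "Our refund window is 30 days from delivery.",
    "The office is closed on public holidays.",
    "Refunds are issued to the original payment method.",
]
print(augment_prompt("How many days is the refund window?", docs, k=1))
```

The model never sees the whole document collection, only the few passages judged relevant, which is what keeps prompts (and per-token costs) bounded as the knowledge base grows.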

Security and guardrails

With Guardrails, you can ensure that your generative application avoids sensitive topics (e.g., racism, sexual content, and misconduct) and that the generated content is grounded to prevent hallucinations. This feature is crucial for maintaining the ethical and professional standards of your applications. Take advantage of Bedrock's built-in security features and integrate them with your existing AWS security controls.
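Once you have created a guardrail, you can apply it per request. The sketch below assembles keyword arguments for the bedrock-runtime invoke_model call, adding the guardrailIdentifier and guardrailVersion parameters only when a guardrail is given. The helper name build_invoke_kwargs and the example guardrail ID are hypothetical; check the InvokeModel API reference for the exact requirements of your SDK version.

```python
import json
from typing import Optional


def build_invoke_kwargs(model_id: str, body: dict, guardrail_id: Optional[str] = None,
                        guardrail_version: Optional[str] = None) -> dict:
    """Assemble invoke_model keyword arguments, optionally attaching a guardrail."""
    kwargs = {"modelId": model_id, "body": json.dumps(body)}
    if guardrail_id is not None:
        # A guardrail is identified by its ID plus a version (a number or "DRAFT").
        if guardrail_version is None:
            raise ValueError("guardrail_version is required when guardrail_id is set")
        kwargs["guardrailIdentifier"] = guardrail_id
        kwargs["guardrailVersion"] = guardrail_version
    return kwargs
```

With the client from earlier, a guarded call could look like bedrock.invoke_model(**build_invoke_kwargs("amazon.nova-micro-v1:0", payload, "your-guardrail-id", "1")), where payload is a request dict like the Nova body shown above. Keeping the guardrail optional means the same code path serves both guarded and unguarded features.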

Cost optimization

Before releasing your application or feature widely, consider the costs that Bedrock inference and any additional features you use will incur as usage grows.

- If you can predict your traffic patterns, consider using Provisioned Throughput for more efficient and cost-effective model inference.

- If your application consists of multiple features, you can use different models and prompts for each feature to optimize costs on an individual basis.

- Along with your choice of model, review the size of the prompt you send with each inference. Bedrock generally prices on a "per token" basis, so longer prompts and larger outputs will cost more.
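Per-token pricing makes a rough cost estimate easy to sketch, and it also shows why mapping each feature to its own model pays off. In the example below, the feature names and all prices are placeholders invented for illustration, not real Bedrock rates; look up current prices for your models and region before relying on any numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureConfig:
    model_id: str
    price_per_1k_input: float   # placeholder USD price per 1,000 input tokens (not a real rate)
    price_per_1k_output: float  # placeholder USD price per 1,000 output tokens (not a real rate)


# Hypothetical mapping of application features to models.
FEATURES = {
    "poem_writer": FeatureConfig("amazon.nova-micro-v1:0", 0.001, 0.002),
    "support_summary": FeatureConfig("anthropic.claude-3-haiku-20240307-v1:0", 0.003, 0.006),
}


def estimate_cost(feature: str, input_tokens: int, output_tokens: int, requests: int = 1) -> float:
    """Estimate cost as tokens / 1000 * price per 1K, for input and output, times requests."""
    cfg = FEATURES[feature]
    per_request = (input_tokens / 1000) * cfg.price_per_1k_input \
        + (output_tokens / 1000) * cfg.price_per_1k_output
    return per_request * requests
```

With these placeholder prices, estimate_cost("poem_writer", 500, 200, requests=1000) comes to roughly 0.9, and doubling the prompt length raises the input share of that figure proportionally.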

Conclusion

Amazon Bedrock is a powerful and flexible platform for integrating LLMs into applications. It provides access to many models, simplifies development, and offers robust customization and security features. As a result, developers can harness the power of generative AI while focusing on creating value for their customers. This article showed how to get started with a basic Bedrock integration and keep our prompts organized.

As AI evolves, developers should stay up to date with the latest features and best practices in Amazon Bedrock to build their AI applications.