When it comes to exposing services from a Kubernetes cluster and making them accessible from outside the cluster, the recommended option is to use a load-balancer type service to redirect incoming traffic to the right pod. In a bare-metal cluster, using this type of service leaves the EXTERNAL-IP field of the service as pending, making it unavailable outside of the cluster. By comparison, this is a native feature of cloud-managed clusters. Let's find out how to achieve a similar result in a bare-metal cluster.

For the past few years, managed cloud Kubernetes offerings have thrived with the continuous growth of Amazon EKS, Google GKE and Microsoft Azure AKS. We like tinkering and experimenting, and we find it important to have flexibility when it comes to the way we orchestrate our clusters. We opted for a bare-metal cluster as they come with improved performance due to lower network latency and overall faster I/O.

As our cluster is not cloud-dependent, one crucial feature that it lacks is load-balancing. Load-balancers are responsible for redirecting incoming network traffic to the nodes hosting the pods. They redistribute the traffic load of the cluster, providing an effective way to prevent node overload. They allow for a single point of contact between the network and the cluster.

To implement this functionality in the cluster, a pure software solution exists: MetalLB. It offers load-balancing for external services and, combined with an ingress controller, it provides a stateful layer between the network and the services. Why is it stateful? Services exposed through the ingress controller may change, nodes may fail or may be added, but access always goes through the same IP address, the one linked with the service exposing the ingress controller pod, without losing track of what is accessible or not.

Only using an ingress controller would mean that you have to expose a node to make your services accessible, effectively creating a single point of failure for your cluster if this node goes down. In MetalLB's Layer2 mode, the IP address of the service does not expose a particular node. Moreover, node failover is provided, meaning that if the node to which the traffic is redirected fails, another node replaces it.

We are going to see how MetalLB and nginx-ingress combine to implement a load-balancer in a bare-metal Kubernetes cluster.

Prerequisites

Before beginning the explanations, we need to make sure that your cluster meets the requirements:

- A Kubernetes cluster running Kubernetes 1.13.0 or higher;

- A CNI plugin compatible with MetalLB (see MetalLB CNI compatibility list):

  - Canal;

  - Cilium;

  - Flannel;

  - Kube-ovn;

  - Calico (mostly);

  - Kube-router (mostly);

  - Weave-net (mostly).

- Unique IPv4 addresses from the network of your cluster to configure IP address pools;

- UDP and TCP traffic on port 7946 must be allowed between nodes, as MetalLB uses the Go memberlist library.

MetalLB must be deployed on the control-plane. The control plane manages the resources of the cluster. For small-scale clusters, it refers to the master node, which acts as the orchestrator of pod scheduling, selecting which node to assign the pods to and managing their lifecycle.

The deployment of MetalLB on the control-plane creates pods for the following resources:

- MetalLB controller;

- MetalLB speakers.

The controller pod manages the load-balancer configuration by using one of the IP address pools provided by the user, and distributing the IP addresses from the selected pool to the load-balancer type services.

Then, the speaker pods are deployed with their corresponding listeners and role bindings. Each worker node receives a speaker pod, and one is elected using the memberlist to be responsible for routing the traffic. If the node hosting the elected pod goes down, a speaker pod on another node is elected to take its place.

MetalLB has three modes:

- Layer2;

- BGP;

- FRR (experimental).

In this tutorial, we are using the Layer2 mode. It uses the Address Resolution Protocol (ARP) to resolve IPv4 addresses to the MAC addresses of the nodes.

Note: If your cluster uses IPv6 addresses, this mode uses the Neighbor Discovery Protocol (NDP) instead.

In contrast, the BGP mode, for Border Gateway Protocol, establishes sessions with BGP peers (typically the routers of your network) to advertise the addresses allocated to the load-balancer type services from the IP address pools.

Finally, the FRR mode is an extension of the BGP mode, adding Bidirectional Forwarding Detection (BFD) support and compatibility with IPv6 addresses.

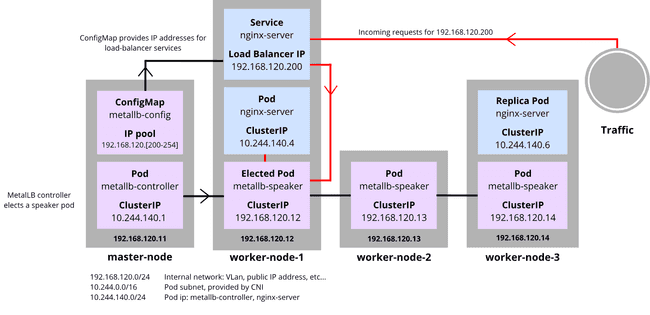

Each mode has its perks and its downsides. The Layer2 mode is interesting because it provides node failover and redundancy. It is also the most easily configurable mode. However, there are two downsides: bottlenecking and slow failover. To go more in-depth, this diagram represents how MetalLB works with the Layer2 mode. It is important to note that some assumptions are made.

Consider a cluster composed of one master node and three worker nodes, with:

- the MetalLB controller specifically requested to deploy on the master node by modifying the manifest;

- an nginx server deployment of two replicas and its corresponding exposed service;

- a Host Network corresponding to this subnet: 192.168.120.0/24.

The controller generates the load-balancer IP of a load-balancer type service by selecting the first unused address of the provided IP address pool. The IP address pool must be composed of one or more unique IP addresses from the Host Network.

When data comes through the load-balancer IP, it is redirected to the elected speaker pod. In this example, the metallb-speaker of worker-node-1 was elected, allowing it to pass the traffic on to kube-proxy, which locates the pod to access.

If the node of the elected speaker pod goes down, another speaker takes its place. If we decided to add a second service of load-balancer type, its load-balancer IP would be 192.168.120.201, the next available IP.
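The allocation rule described above (pick the first unused address of the pool) can be sketched in plain shell. This is only an illustration of the reasoning, not MetalLB's actual implementation: the pool bounds 192.168.120.200 to 192.168.120.210 and the helper name next_free_ip are assumptions made for the example.

```shell
# Toy model of "first unused address" allocation inside one /24 pool.
# next_free_ip PREFIX FIRST LAST [ALLOCATED_IP...]
# PREFIX is the first three octets; FIRST and LAST bound the last octet.
next_free_ip() {
  prefix=$1; first=$2; last=$3
  shift 3
  octet=$first
  while [ "$octet" -le "$last" ]; do
    candidate="$prefix.$octet"
    taken=no
    for used in "$@"; do
      if [ "$used" = "$candidate" ]; then
        taken=yes
      fi
    done
    if [ "$taken" = no ]; then
      echo "$candidate"
      return 0
    fi
    octet=$((octet + 1))
  done
  return 1  # pool exhausted
}

# The first service already holds .200, so a second service gets .201.
next_free_ip 192.168.120 200 210 192.168.120.200
# prints: 192.168.120.201
```

When the pool is exhausted the helper returns a non-zero status, which mirrors a service whose EXTERNAL-IP stays pending.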

Note: This mode does not implement true load-balancing, as all traffic is directed towards the node with the elected speaker pod. The BGP and FRR modes are more efficient in this respect, as they truly dispatch the traffic as a cloud load-balancer would.

Tutorial

Step 1: Installation of MetalLB

To practice the installation of MetalLB in your local environment, the following manifests must be deployed on the control-plane:

- namespace.yaml creates a MetalLB namespace;

- metallb.yaml deploys the MetalLB controller and speakers, as well as the role bindings and listeners that are needed.

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

Once MetalLB is installed, we must configure an IP address pool. Once the pool is deployed, the controller allocates IP addresses from it to the load-balancer type services. The IP address pool allows for one or many IP addresses, depending on what we need. The allocated addresses must be unique and part of the host network of our cluster.

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      # Replace the placeholder with a range of free addresses from the host network.
      - {ip-start}-{ip-stop}

Save this file under the name config-pool.yaml and simply apply it using kubectl apply -f config-pool.yaml.

Note: It is possible to deploy more than one IP address pool.
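As a sketch of what several pools could look like with the ConfigMap format used above, the snippet below writes a config-pool.yaml containing two pools. The address ranges, the pool name reserved, and the use of auto-assign: false are assumptions chosen for illustration; pick ranges that are actually free on your host network.

```shell
# Write a MetalLB (ConfigMap-based, v0.12.x) configuration with two pools.
# The ranges below are illustrative assumptions taken from 192.168.120.0/24.
cat > config-pool.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.120.200-192.168.120.210
    - name: reserved
      protocol: layer2
      # Not handed out automatically; a service must ask for this pool.
      auto-assign: false
      addresses:
      - 192.168.120.220-192.168.120.225
EOF
```

The file is then applied as before with kubectl apply -f config-pool.yaml. With this configuration format, a service can request the second pool through the metallb.universe.tf/address-pool annotation.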

Step 2: Testing

To avoid any confusion due to the name of the server we deploy, in the remaining sections of the article, we refer to:

- the ingress controller that exposes services as nginx-ingress;

- the nginx server image, a web server acting as the test service that we expose, as nginx-server.

We deploy and expose nginx-server on a node to test if the installation of MetalLB was successful:

kubectl create deploy nginx --image nginx

kubectl expose deploy nginx --port 80 --type LoadBalancer

To access the load-balancer type service, we need to retrieve the EXTERNAL-IP of the nginx-server.

INGRESS_EXTERNAL_IP=$(kubectl get svc nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

curl "$INGRESS_EXTERNAL_IP"

This is the desired result:

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

To clean up what we used during testing, we delete what we created:

kubectl delete deployment nginx

kubectl delete svc nginx

In the previous part, we managed to deploy a service and make it accessible outside of the cluster. However, this is not satisfactory because a different IP address is allocated every time we deploy a service. We need to be able to access every service from a single IP address. To achieve this, we use nginx-ingress.

Ingresses are a way to expose services outside of the cluster. They allow for hosts and routes to be created, so that services can be reached through DNS names rather than raw IP addresses. nginx-ingress listens on ports 80 and 443 and manages every service exposed through them. It allows for two or more services to be exposed on the same IP address using different hosts, which means that a single IP is effectively used.

In cloud Kubernetes clusters, ingresses are exposed through load-balancers. In bare-metal clusters, ingresses are usually exposed through NodePort or Host Network, which is inconvenient because it exposes a node of your cluster, thus creating a single point of failure. If this node goes down, access to the services available through this node falls back on another node, meaning that you have to replace the IP address that you previously used with the IP address of another one of your nodes.

Using both MetalLB and nginx-ingress allows us to get even closer to a proper load-balancer by layering the access to the services. The IP address stays the same, even when a node goes down, thanks to the failover mechanism of MetalLB, and the services are now centralized thanks to nginx-ingress.

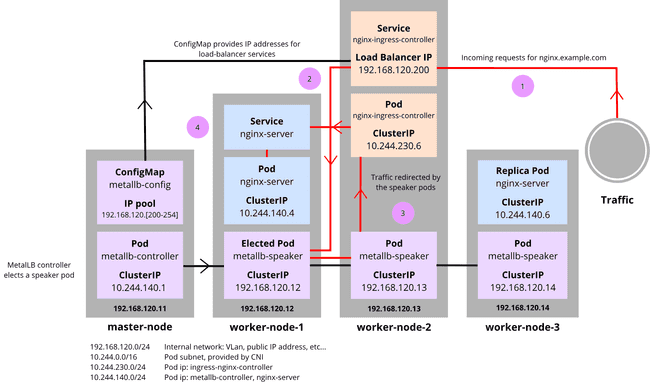

Using the previously set assumptions, we update the diagram as such:

The ConfigMap provides the IP pool to the load-balancer services, and a speaker pod is elected, as seen previously.

1: A request arrives on http://nginx.example.com. It is resolved as the load-balancer IP of the nginx-ingress-controller: 192.168.120.200.

2: Traffic exits the service to the elected metallb-speaker, which communicates with the other metallb-speaker pods to redirect the traffic to the nginx-ingress-controller pod.

3: Traffic goes to the nginx-ingress-controller pod.

4: The nginx-ingress-controller pod sends the traffic to the service that was requested with the hostname nginx.example.com. nginx-server is contacted and serves the website to the outside client.
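The host-based routing of step 4 is driven by an Ingress resource. As a hedged sketch, such a resource could be written declaratively as follows, assuming a ClusterIP service named nginx listening on port 80 and an ingress class named nginx; the file name nginx-ingress.yaml is arbitrary.

```shell
# Write an Ingress that routes requests for nginx.example.com to the
# "nginx" service on port 80 (service name and port are assumptions).
cat > nginx-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  ingressClassName: nginx
  rules:
  - host: nginx.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80
EOF
```

Applying this file with kubectl apply -f nginx-ingress.yaml would ask the ingress controller to route by hostname. The pathType: Prefix value is a choice made for this sketch so that every path under / reaches the service.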

Step 1: Installation of nginx-ingress

We deploy the following manifest on a control-plane node:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.2.0/deploy/static/provider/cloud/deploy.yaml

Step 2: Verification

Using this command, we check if the EXTERNAL-IP field is pending or not.

kubectl get service ingress-nginx-controller --namespace=ingress-nginx

Normally, the first address of the pool defined in the ConfigMap should be allocated to the ingress-nginx-controller.
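Because the EXTERNAL-IP can stay pending for a short while after the service is created, it can help to poll until a value shows up. The helper below is a small sketch; its name retry_until_output, the number of attempts and the delay are assumptions, not part of kubectl or MetalLB.

```shell
# retry_until_output ATTEMPTS DELAY COMMAND [ARGS...]
# Runs COMMAND until it prints something, then echoes that output.
retry_until_output() {
  attempts=$1; delay=$2
  shift 2
  while [ "$attempts" -gt 0 ]; do
    output=$("$@" 2>/dev/null) || output=""
    if [ -n "$output" ]; then
      echo "$output"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  return 1  # still empty (for example, EXTERNAL-IP still pending)
}

# Usage against the cluster (not executed here):
# retry_until_output 30 2 kubectl get svc ingress-nginx-controller \
#   --namespace=ingress-nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

The jsonpath query prints nothing while no address is assigned, which is what the loop relies on. If the helper gives up, checking the IP address pool configuration is a reasonable next step.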

Practical example

To try out our new installation, we deploy two services without specifying the type, which defaults to ClusterIP. First, nginx-server:

- Create a deployment;

- Expose the deployment;

- Create an ingress on the nginx.example.com hostname.

kubectl create deployment nginx --image=nginx --port=80

kubectl expose deployment nginx

kubectl create ingress nginx --class=nginx \
  --rule nginx.example.com/=nginx:80

Follow the same steps for the deployment of an httpd server, which is similar to nginx-server and is simply a service that we expose, acting as a "Hello World", on the httpd.example.com hostname:

kubectl create deployment httpd --image=httpd --port=80

kubectl expose deployment httpd

kubectl create ingress httpd --class=nginx \
  --rule httpd.example.com/=httpd:80

We can access the websites from the host computer to see if they were exposed correctly. To make it easier to access the websites, we need to add DNS rules by appending them to the /etc/hosts file on the host computer. We obtain the ingress-nginx-controller EXTERNAL-IP field:

INGRESS_EXTERNAL_IP=$(kubectl get svc --namespace=ingress-nginx ingress-nginx-controller -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo "$INGRESS_EXTERNAL_IP"

After retrieving the IP, we put the following line in /etc/hosts, replacing the INGRESS_EXTERNAL_IP variable with the value previously copied: $INGRESS_EXTERNAL_IP nginx.example.com httpd.example.com.
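The /etc/hosts entry can also be generated rather than typed by hand. A minimal sketch, where hosts_entry is a hypothetical helper and 192.168.120.200 stands in for the address returned by the previous command:

```shell
# hosts_entry IP HOSTNAME [HOSTNAME...]
# Prints one /etc/hosts line mapping every hostname to IP.
hosts_entry() {
  ip=$1
  shift
  printf '%s' "$ip"
  for name in "$@"; do
    printf ' %s' "$name"
  done
  printf '\n'
}

hosts_entry 192.168.120.200 nginx.example.com httpd.example.com
# prints: 192.168.120.200 nginx.example.com httpd.example.com
```

On the host computer, the output can be appended with hosts_entry "$INGRESS_EXTERNAL_IP" nginx.example.com httpd.example.com | sudo tee -a /etc/hosts.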

Now, accessing the services is done on the same IP address using DNS. Checking if the pages are working as intended is done by curling the nginx.example.com and httpd.example.com hostnames, or by opening the hostnames in your web browser on the host computer.

curl nginx.example.com

curl httpd.example.com

In case changing /etc/hosts is not possible, curling with a header indicating the hostname is an alternative option:

curl "$INGRESS_EXTERNAL_IP" -H "Host: nginx.example.com"

curl "$INGRESS_EXTERNAL_IP" -H "Host: httpd.example.com"

You should see “It works” from httpd.example.com and the same page as in the MetalLB test from nginx.example.com.
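To turn these manual checks into a repeatable smoke test, the response body can be matched against the expected text. The sketch below assumes the default pages of the nginx and httpd images; body_contains is a hypothetical helper, and the curl calls are shown as comments because they need the running cluster.

```shell
# body_contains TEXT
# Reads a response body on stdin and succeeds if it contains TEXT.
body_contains() {
  grep -q -F -- "$1"
}

# Against the cluster (not executed here):
# curl -s "$INGRESS_EXTERNAL_IP" -H "Host: nginx.example.com" | body_contains "Welcome to nginx!"
# curl -s "$INGRESS_EXTERNAL_IP" -H "Host: httpd.example.com" | body_contains "It works"

# Offline demonstration with a canned body:
printf '<html><body><h1>It works!</h1></body></html>\n' | body_contains "It works" && echo "httpd page OK"
# prints: httpd page OK
```

Matching on a fixed string with grep -F keeps the check simple; a stricter test could also compare the HTTP status code.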

To clean up what we used during testing, we delete what we created:

kubectl delete deployment nginx

kubectl delete svc nginx

kubectl delete ingress nginx

kubectl delete deployment httpd

kubectl delete svc httpd

kubectl delete ingress httpd

Common issues

Sometimes, nginx-ingress has a problem related to webhook notifications: Internal error occurred: failed calling webhook "validate.nginx.ingress.kubernetes.io".

First, verify that all nginx-ingress pods are up and running. You need to wait for them to be ready, which is done by using the kubectl wait --for=condition=ready --namespace ingress-nginx --timeout=400s --all pods command.

If this does not resolve the problem, it is discussed in this GitHub issue, which states that a simple workaround is to delete the validating webhook configuration like so: kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission.

Conclusion

Using a load-balancer in the form of MetalLB, in tandem with nginx-ingress, to create a single point of contact for the services is now within your reach. It is convenient to use them as a failover mechanism for bare-metal and on-premise clusters. They are easy-to-use pieces of software which, although they come with some limitations regarding the CNI, provide a great user experience and are very easy to deploy.

Not everything is perfect: the Layer2 mode comes with a slow failover, as well as possible bottlenecks due to the fact that it is not a true load-balancer distributing packets. It is still a useful tool that makes life easier overall.